PDFreak AI

Explainable PDF Malware Detection for Resource-Constrained SOC Teams.

Intro

PDFreak AI is a modular, privacy-preserving system that detects malicious PDF documents and generates analyst-friendly threat reports. It combines static + dynamic analysis, a lightweight Random Forest binary classifier, and a locally hosted LLM with Retrieval-Augmented Generation (RAG) to produce explainable results with MITRE ATT&CK mapping and SOC recommendations.

Built for: Small SOC teams (low budget, low time, high alert volume)

Core idea: Binary detection first → deeper LLM reporting only for malicious PDFs

Offline by design: No external API calls; runs locally for privacy and compliance

Stack: Python + FastAPI, Random Forest (scikit-learn), Mistral 7B + RAG (FAISS, LangChain), React + Tailwind, Docker, Azure VM

Problem

PDF files remain a common malware delivery format because they can embed scripts, links, and payloads while still appearing legitimate. Traditional signature-based approaches struggle to generalize to obfuscated or evolving threats, while many advanced approaches reduce transparency and require heavy infrastructure. The goal of this project was to build a solution that is automated, explainable, and practical to run locally in constrained SOC environments.

Solution Overview

PDFreak AI follows a staged pipeline: PDFs are analyzed using a combined static + dynamic feature extraction process, transformed into a structured feature vector, and classified by a Random Forest model to produce a malicious/benign verdict. Only files flagged as malicious are passed into a RAG-enhanced, locally hosted Mistral 7B module, which generates a multi-class threat label, MITRE ATT&CK technique mapping, and SOC response guidance.
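To make the "structured feature vector" step concrete, here is a minimal sketch of the static half of feature extraction. It counts a few PDF name objects and measures byte entropy. The keyword list, the feature names, and the function names are assumptions chosen for illustration; the project's actual feature set is not given in this write-up, and dynamic analysis is out of scope here.

```python
import math
from collections import Counter

# Hypothetical keyword set, loosely modelled on the name counts that PDFiD reports.
KEYWORDS = (b"/JavaScript", b"/JS", b"/OpenAction", b"/Launch", b"/EmbeddedFile")


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of a byte string in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in Counter(data).values())


def extract_static_features(data: bytes) -> dict[str, float]:
    """Build a flat feature dictionary from raw PDF bytes."""
    features = {
        kw.decode().lstrip("/").lower() + "_count": float(data.count(kw))
        for kw in KEYWORDS
    }
    features["entropy"] = shannon_entropy(data)
    features["size_bytes"] = float(len(data))
    return features
```

This sketch matches raw byte patterns only, so obfuscated names and content inside compressed streams are not handled.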

How it Works (Architecture)

The diagram above illustrates the full pipeline, from initial data collection to the final web-based report, and shows how tools such as PDFiD, Ghidra, FAISS, Mistral 7B, and MITRE ATT&CK fit into the system.

Key Engineering Decisions

Random Forest was chosen for its balance of performance, interpretability, and efficiency. It handles heterogeneous PDF features well, resists overfitting on small datasets, and exposes feature importances that support explainable security decisions. It also trains and runs quickly on low-resource systems.

Running a lightweight binary classifier first ensures speed and efficiency. Only malicious PDFs trigger the heavier LLM pipeline, which preserves compute and reduces latency. This architecture prevents unnecessary load while delivering rich analytical insights only when needed.
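The gating logic can be sketched in a few lines. The example below assumes the classifier is exposed as a scoring function and the LLM pipeline as a report function; `Verdict`, `triage`, and the 0.5 threshold are hypothetical names and values, not taken from the project.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Verdict:
    malicious: bool
    score: float
    report: Optional[str] = None  # filled only for malicious files


def triage(
    features: dict[str, float],
    score_fn: Callable[[dict[str, float]], float],
    report_fn: Callable[[dict[str, float]], str],
    threshold: float = 0.5,  # assumed cut-off; tune on validation data
) -> Verdict:
    """Run the cheap classifier first; call the expensive reporter only on a malicious verdict."""
    score = score_fn(features)
    if score < threshold:
        return Verdict(malicious=False, score=score)
    return Verdict(malicious=True, score=score, report=report_fn(features))
```

Because `report_fn` is only called on the malicious branch, a batch dominated by benign files costs little more than the classifier pass.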

Local deployment supports privacy, compliance, and safety. Because malware samples and sensitive documents never leave the environment, the system avoids cloud-based data leakage risks. Running everything locally also makes performance independent of network conditions and supports offline SOC operations.

Results and Performance

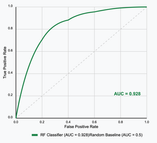

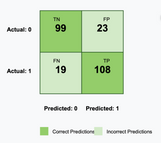

Binary Detection Accuracy — 83.1%

The Random Forest classifier reached this accuracy on unseen PDFs, driven by features such as entropy, JavaScript analysis, and embedded object behaviour.

Multi-Class Classification — 79.8%

RAG-powered LLM correctly identified malware types and ATT&CK techniques with high alignment to analyst playbooks.

Explainability Verified

SHAP & LIME confirmed feature contributions and validated that the system’s decisions were interpretable, not black-box.

Operational Reliability

Detection takes roughly 2 seconds per file, the FastAPI deployment runs offline, and the UI is designed around the analyst's workflow.

Explainability & Trust

Modern security automation must never feel like a “black box.”

PDFreak AI was designed to provide clear, defensible reasoning behind every decision.

Why Explainability Matters

Security teams need to understand why a file was flagged, especially when remediation actions involve user disruption or device isolation.

PDFreak AI exposes the features and behaviors that influenced each verdict, giving analysts confidence in automated decisions.

How Explainability Works in PDFreak AI

Feature Importance (Global Insight)

Random Forest exposes the strongest predictive signals, such as JavaScript activity, entropy spikes, or suspicious object structures, so analysts can see which features drive the model's decisions overall.
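In scikit-learn, this global ranking can be read from a fitted model's `feature_importances_` attribute. The sketch below trains on synthetic data whose label depends only on a hypothetical `js_count` feature. The feature names and the data are assumptions for illustration, not the project's dataset.

```python
import random

from sklearn.ensemble import RandomForestClassifier

FEATURES = ["js_count", "entropy", "page_count"]  # hypothetical feature names

# Synthetic stand-in data: the label depends only on js_count.
rng = random.Random(0)
X = [[rng.randint(0, 5), rng.uniform(3.0, 8.0), rng.randint(1, 20)] for _ in range(300)]
y = [1 if row[0] >= 2 else 0 for row in X]

model = RandomForestClassifier(n_estimators=50, random_state=0)
model.fit(X, y)

# feature_importances_ holds one impurity-based importance per feature, normalised to sum to 1.
ranked = sorted(zip(FEATURES, model.feature_importances_), key=lambda pair: pair[1], reverse=True)
for name, importance in ranked:
    print(f"{name:<12} {importance:.3f}")
```

Impurity-based importances can favour features with many distinct values, which is one reason to cross-check the ranking with SHAP or a permutation-based method.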

SHAP Analysis (Model Transparency)

SHAP values show how each feature contributes to the final decision. This confirms the model is using meaningful indicators rather than noise.

LIME (Per-PDF Justification)

LIME explains individual file decisions, highlighting exactly which attributes pushed the model toward “malicious” or “benign.”

This is especially useful for edge cases and ambiguous detections.

LLM-Generated Reasoning (Analyst-Friendly Narrative)

For malicious files, the RAG-powered LLM produces a human-readable summary that outlines:

observed behaviours

likely intent

mapped MITRE ATT&CK techniques

recommended next steps

This bridges the gap between raw technical output and SOC analyst workflow.
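The retrieval step behind such a summary can be sketched as follows. This is a toy stand-in: it ranks a four-entry knowledge base by bag-of-words cosine similarity, whereas the project uses FAISS vector search. The knowledge-base wording, the function names, and the prompt text are all assumptions, and the call to the local Mistral 7B model is omitted because its serving interface is not described here.

```python
import math
import re
from collections import Counter

# Tiny illustrative knowledge base; a real index would hold far more ATT&CK text.
KNOWLEDGE_BASE = {
    "T1566.001": "Spearphishing Attachment: adversary sends email with a malicious attachment such as a PDF",
    "T1204.002": "User Execution Malicious File: user opens a malicious file that runs code",
    "T1059.007": "Command and Scripting Interpreter JavaScript: adversary executes embedded JavaScript",
    "T1203": "Exploitation for Client Execution: adversary exploits a vulnerability in a client application such as a PDF reader",
}


def tokenize(text: str) -> Counter:
    """Lowercase the text and count alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k knowledge-base entries most similar to the query."""
    q = tokenize(query)
    ranked = sorted(KNOWLEDGE_BASE.items(), key=lambda item: cosine(q, tokenize(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(findings: str, k: int = 2) -> str:
    """Assemble a prompt that grounds the model in the retrieved entries."""
    context = "\n".join(f"- {tid}: {text}" for tid, text in retrieve(findings, k))
    return (
        "You are assisting a SOC analyst. Using only the context below, summarise the observed "
        "behaviours, likely intent, relevant MITRE ATT&CK techniques, and recommended next steps.\n\n"
        f"Context:\n{context}\n\nFindings:\n{findings}\n"
    )
```

Restricting the model to retrieved context is what lets the report cite specific technique IDs rather than rely on what the model remembers.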

Deployment & UX

PDFreak AI was built to run securely, privately, and efficiently in environments where sensitive files cannot leave the network. The entire system is packaged as a lightweight, locally deployed web application that analysts can use with minimal setup and zero external dependencies.

Local, Secure Deployment

The platform runs fully offline using a containerized architecture:

FastAPI backend for classification, feature extraction, and LLM orchestration

Mistral 7B (local) for explainable threat reporting via RAG

Docker-based deployment for reproducibility and portability

No cloud calls — all processing happens inside the analyst’s environment

This ensures privacy, compliance, and predictable performance, even without internet access.

Fast, Simple Analyst Workflow

The UI was designed to eliminate friction during investigations:

Upload a PDF

Instant malicious/benign verdict

If malicious → LLM-generated, human-readable report

View MITRE techniques + SOC recommendations

Clean typography, high contrast, and a single-file workflow make it intuitive for both junior and senior analysts.

Backend Orchestration

The backend coordinates all components:

Validates PDF input

Runs static/dynamic feature extraction

Calls the Random Forest model

If malicious, triggers the RAG module

Returns structured JSON for the UI

This modular design allows future upgrades without rewriting the system.
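The orchestration steps above can be sketched as one function with the components injected as callables, which is also what makes each stage swappable. The FastAPI route wiring is left out, and `MAX_BYTES`, the JSON field names, and the function names are assumptions rather than the project's actual API.

```python
import json
from typing import Callable

MAX_BYTES = 20 * 1024 * 1024  # hypothetical upload limit


def validate_pdf(data: bytes) -> None:
    """Reject uploads that are empty, oversized, or lack a PDF header."""
    if not data:
        raise ValueError("empty upload")
    if len(data) > MAX_BYTES:
        raise ValueError("file exceeds size limit")
    # The header normally sits at offset 0; some readers accept it anywhere in the first 1024 bytes.
    if b"%PDF-" not in data[:1024]:
        raise ValueError("missing %PDF- header")


def analyse(
    data: bytes,
    extract: Callable[[bytes], dict],
    classify: Callable[[dict], bool],
    report: Callable[[dict], str],
) -> str:
    """Validate, extract features, classify, optionally report, and return JSON for the UI."""
    try:
        validate_pdf(data)
    except ValueError as exc:
        return json.dumps({"status": "rejected", "reason": str(exc)})
    features = extract(data)
    malicious = classify(features)
    body = {"status": "ok", "verdict": "malicious" if malicious else "benign", "report": None}
    if malicious:
        body["report"] = report(features)  # the RAG module runs only on this branch
    return json.dumps(body)
```

Replacing the classifier or the report generator then means passing a different callable, with no change to the validation or response code.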